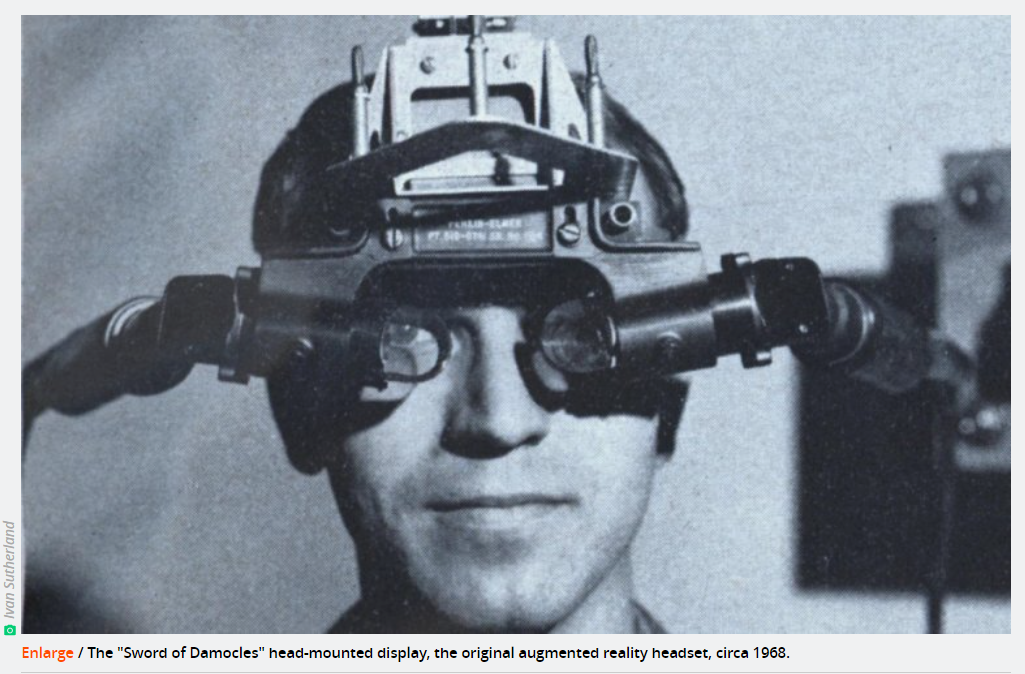

“Amazon plans to join other tech giants like Apple, Google, and Meta in building its own mass-market augmented reality product, job listings discovered by Protocol suggest.”

“The numerous related jobs included roles in computer vision, product management, and more. They reportedly referenced ‘XR/AR devices’ and ‘an advanced XR research concept.’ Since Protocol ran its report on Monday, several of the job listings referenced have been taken down, and others have had specific language about products removed.”

“Google, Microsoft, and Snap have all released various AR wearables to varying degrees of success over the years, and they seem to be still working on future products in that category. Meanwhile, it's one of the industry's worst-kept secrets that Apple employs a vast team of engineers, researchers, and more who are working on mixed reality devices, including mass-market consumer AR glasses. And Meta (formerly Facebook) has made its intentions to focus on AR explicitly clear over the past couple of years.”

“It's not all that surprising that Amazon is chasing the same thing. As Protocol notes, Amazon launched a new R&D group led by Kharis O'Connell, an executive who has previously worked on AR products at Google and elsewhere.”

“But Amazon's product might not be the same kind of product that we know Meta and Apple have focused on; it might not be a wearable at all. Some of Amazon's job listings refer to it as a ‘smart home’ device. And Amazon is among the tech companies that have experimented with room-scale projection and holograms instead of wearables for AR.”